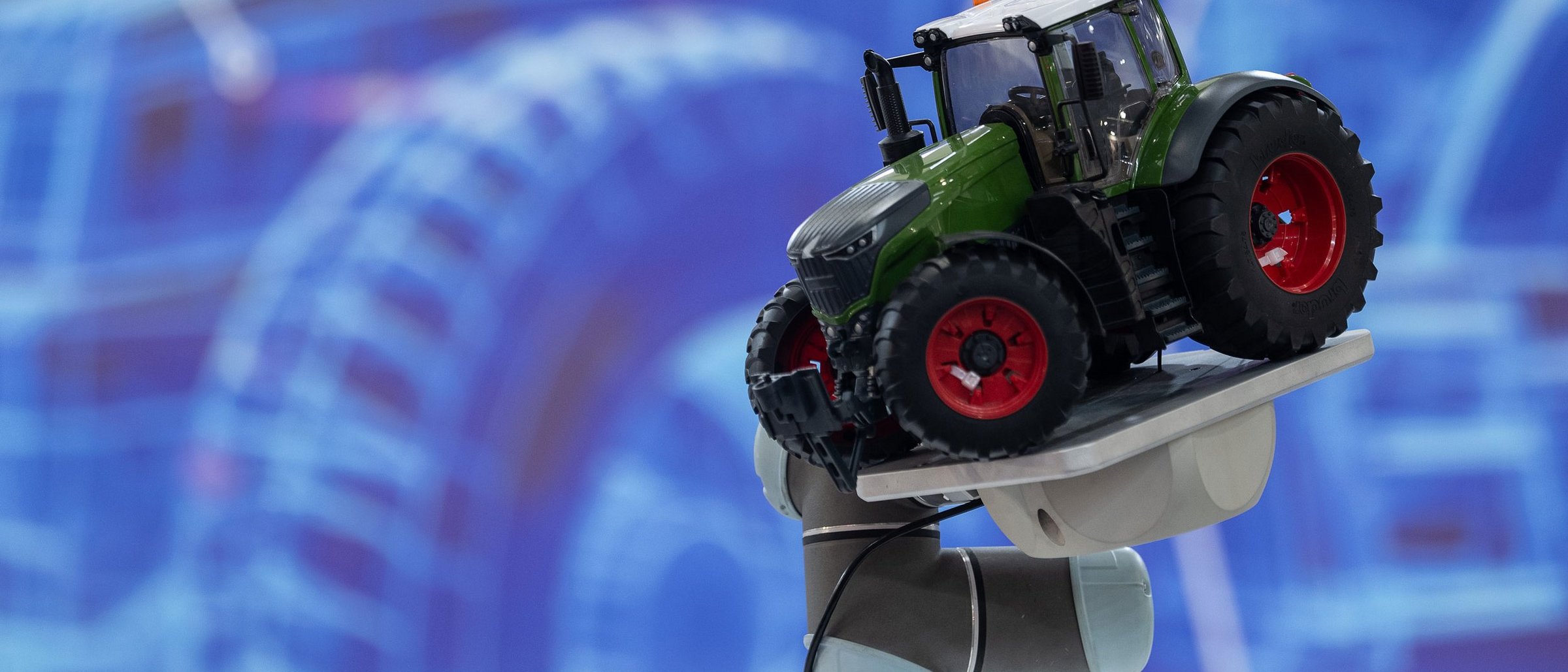

Intuitive Human Machine Interfaces in off-highway use ensure greater productivity and enhance safety

At SYSTEMS & COMPONENTS 2025, it will become clear how increasing connectivity and automation in the off-highway sector are influencing the interaction between man and machine: the “ideal” human-machine interface (HMI) supports the driver by providing the right information at the right time. Camera and radar systems, which will be presented in Hanover from November 9 to 15, not only facilitate all-round visibility but also increase safety. Extended reality (XR) is increasingly being applied to assistance systems and HMI solutions for mobile machinery.

“Mobile machinery must be able to work reliably in a harsh and constantly changing environment. The machinery performs tasks that demand the operator's undivided attention,” says Petra Kaiser, Brand Manager at the DLG (German Agricultural Society), organizer of SYSTEMS & COMPONENTS, the leading meeting place for the international supplier industry for the off-highway sector. Autonomous functions therefore provide enhanced support for operators. “These functions reduce workload, while at the same time the machine operator retains control,” says Kaiser. This year’s guiding theme of SYSTEMS & COMPONENTS is “Touch Smart Efficiency”.

The wide variety of machines in the agricultural and construction industries creates high demand for modular and flexible solutions. “This means that new functions for the autonomous operation of a machine can be added piece by piece. This development is also reflected at the exhibition grounds in Hanover,” says Kaiser, giving an outlook on the content of the B2B marketplace, which will once again take place as part of the world's leading trade fair for agricultural machinery, AGRITECHNICA.

Photo: DLG

Reliable under harsh conditions

Transferred to the system architecture of a mobile machine, the trends in the off-highway sector mean that more and more data from an increasing number of sensors must be processed. Ultimately, this information should be presented to the operator in an intuitive way. Smart and effective HMI technologies are essential for this. They keep the driver up to date with the current situation during operation. “A new type of communication between man and machine is required, in which the vehicle also increasingly takes on an active role,” explains Kaiser. In other words, the vehicle helps the driver to understand the situation, enhances safety and provides all the necessary information without overwhelming them. At SYSTEMS & COMPONENTS, numerous HMI solutions will focus on continuous feedback on important operating data such as speed, fuel consumption, crop yield and machine status. This makes it possible to make informed decisions in the cockpit and work more efficiently.

Mobile machines are increasingly being controlled remotely. In these cases, it is a particular challenge for human operators to maintain an overview of the machine and its surroundings. The latest developments therefore focus on extended reality (XR) technologies. The digital extension of the human sensory organs is intended to take interaction with the machine to a new level. For Christiana Seethaler, Vice President Product Development at TTControl, the integration of XR technologies into HMI products is the “next step towards creating smarter, more reliable off-highway machines.” The principle behind it: XR technologies show the machine status in the driver's immediate field of vision, while haptic feedback can send tangible signals, for example via a joystick. In addition, acoustic signals draw attention to potentially dangerous situations.

Making work machines “tangible” for people

In combination with external sensors, such XR tools can help to identify obstacles and other influencing factors in the environment. This not only improves safety, but also increases efficiency through predictive working. However: “XR technology must be intuitive enough to enable low-threshold use,” explains Martijn Rooker, Innovation Projects & Funding Manager at TTControl. “That's why we are applying a human-centered, scenario-based co-design approach in the TheiaXR project. This means that we incorporate experiences from real operators and industrial companies to ensure that all solutions developed are as intuitive and user-friendly as possible,” says Rooker, who is coordinating the European research project.

The aim is to make the “invisible” visible and to digitally enhance the perception of operators in the cockpit without restricting their ability to perform the job. The consortium consists of 11 partners, including TU Dresden, TU Graz, the University of Luxembourg and the Finnish research institute VTT on the scientific side. TheiaXR is currently being tested in three scenarios in which mobile machines are used: snow and slope grooming, logistics and construction. In the construction scenario, the aim is to find a way to depict gas pipes and power cables using virtual reality, as these are often destroyed during construction work. The technology could be used to display information on the depth and route of such lines in the machine operator's field of vision, for example by projecting it onto the cockpit windshield.

Sensor fusion contributes to greater reliability

Strong environmental sensor technologies are not only an important building block for the automation of mobile machinery; they can also contribute to safety. Consequently, solutions for accident prevention are a key focus of SYSTEMS & COMPONENTS. The technology suppliers are confronted with hugely different environmental conditions. Strong contrasts, backlighting and twilight pose major challenges for camera systems. This is one reason why several sensor systems such as LiDAR, ultrasound or radar are used in combination with RGB or infrared cameras. The process of combining and comparing sensor data coming from disparate sources is known as sensor fusion. Applying it increases the reliability of the commands that the assistance systems derive from the data. This can allow work cycles to be carried out more effectively and more safely, which ultimately saves time and costs.
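To illustrate the idea of sensor fusion, the following minimal Python sketch combines distance estimates from several sensors into one value using an inverse-variance weighted mean. This is one common textbook approach and not the method of any exhibitor named here; the sensor labels, the noise variances and the names `RangeReading` and `fuse_ranges` are assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    sensor: str        # illustrative label, e.g. "radar" or "ultrasound"
    distance_m: float  # measured distance to the obstacle in metres
    variance: float    # assumed measurement noise variance in m^2


def fuse_ranges(readings):
    """Fuse distance readings with an inverse-variance weighted mean.

    Readings with lower assumed noise get a higher weight. Returns the
    fused distance and the variance of the fused estimate.
    """
    if not readings:
        raise ValueError("at least one reading is required")
    weights = [1.0 / r.variance for r in readings]
    total_weight = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total_weight
    return fused, 1.0 / total_weight


# Hypothetical readings of the same obstacle from three sensor types.
readings = [
    RangeReading("radar", 4.0, 0.04),
    RangeReading("ultrasound", 4.2, 0.01),
    RangeReading("camera", 3.5, 0.25),
]
fused_distance, fused_variance = fuse_ranges(readings)
```

In this sketch, the fused variance is always smaller than that of the best single sensor, which is one way to read the claim that fusion makes the resulting commands more reliable.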


Modern ultrasonic systems with up to twelve sensors enable a 360-degree all-round view of the machine. Depending on the configuration, the sensors measure only the pure distance or also offer object localization. An algorithm uses triangulation to determine the position of the object in the detection area. Safety certification in accordance with ISO 25119 enables this type of solution to be integrated into a higher-level system for environment detection, which must meet the functional safety requirements for agricultural machinery and tractors. This includes, for example, approach controls or emergency braking functions for slow-moving machines.
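How an object position can be derived from pure distance measurements is sketched below for the simplest case: two ultrasonic sensors mounted on a common baseline, each reporting only its range to the object. Strictly speaking this is trilateration from two ranges; it is shown here as a stand-in for the triangulation step mentioned above, and the sensor positions and the function name `locate_object` are hypothetical.

```python
import math


def locate_object(x1, r1, x2, r2):
    """Locate an object in front of two sensors on the line y = 0.

    x1, x2: sensor positions along the baseline in metres (x1 != x2)
    r1, r2: measured distances from each sensor to the object in metres
    Returns (x, y) with y >= 0, or None if the ranges are inconsistent.
    """
    if x1 == x2:
        raise ValueError("sensors must be at different positions")
    # Subtracting the two circle equations eliminates y and yields x.
    x = (r1**2 - r2**2 + x2**2 - x1**2) / (2.0 * (x2 - x1))
    y_squared = r1**2 - (x - x1) ** 2
    if y_squared < 0:
        return None  # the two range circles do not intersect
    return x, math.sqrt(y_squared)


# Hypothetical setup: sensors 1 m apart, object 0.3 m along and 0.4 m in front.
position = locate_object(0.0, 0.5, 1.0, math.sqrt(0.65))
```

With more than two sensors, as in the twelve-sensor systems described above, the same principle would typically be applied to several sensor pairs or solved as a least-squares problem.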

Collision warning for more precise maneuvering

One example of sensor fusion and the resulting increased performance of assistance systems is the Collision Avoidance System (CAS) from Bosch Rexroth. Using radar, ultrasound and smart camera sensing, the CAS continually monitors the environment and warns operators of excavators, wheel loaders and other machines of any impending collision by vibrating the joystick. The type and intensity of the haptic feedback provide information about the distance to the object. If the machine is operated with two joysticks, the system can communicate the direction from which the object is approaching by vibrating the respective joystick.
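The mapping from distance and direction to haptic feedback can be pictured roughly as follows. This Python sketch is not Bosch Rexroth's implementation: the linear ramp, the threshold distances and the function names are assumptions chosen only to show the principle of encoding distance in vibration intensity and direction in the choice of joystick.

```python
def vibration_intensity(distance_m, warn_at_m=5.0, full_at_m=1.0):
    """Map obstacle distance to a vibration level between 0.0 and 1.0.

    No vibration beyond warn_at_m, full vibration at or below full_at_m,
    and a linear ramp in between. Both thresholds are hypothetical.
    """
    if distance_m >= warn_at_m:
        return 0.0
    if distance_m <= full_at_m:
        return 1.0
    return (warn_at_m - distance_m) / (warn_at_m - full_at_m)


def joystick_commands(distance_m, side):
    """Vibrate only the joystick on the side the object approaches from."""
    if side not in ("left", "right"):
        raise ValueError("side must be 'left' or 'right'")
    level = vibration_intensity(distance_m)
    return {
        "left": level if side == "left" else 0.0,
        "right": level if side == "right" else 0.0,
    }


# Hypothetical case: an object 3 m away, approaching from the left.
commands = joystick_commands(3.0, "left")
```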

In contrast to purely visual or acoustic feedback, attention remains almost fully focused on the work process, which saves important reaction time. Even with loud ambient noise and without looking at the display, the warning is delivered directly to the driver's hands. For higher automation levels, there is also the option of automatic brake intervention via an electro-hydraulically controlled power brake valve: if there is no timely reaction to the warning, the required brake pressure is built up automatically.
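The escalation from warning to automatic braking can be expressed as a simple timing rule. The sketch below assumes a fixed reaction window and a boolean flag for the operator's reaction; both, like the function name `brake_intervention_needed`, are hypothetical, and a real system would have to meet the applicable functional safety requirements.

```python
def brake_intervention_needed(warning_started_s, now_s, operator_reacted,
                              reaction_window_s=1.5):
    """Decide whether the system should brake automatically.

    warning_started_s: time at which the haptic warning began, in seconds
    now_s: current time in seconds, on the same clock
    operator_reacted: True if the operator has already responded
    reaction_window_s: assumed time allowed for a reaction (hypothetical)
    """
    if operator_reacted:
        return False
    return (now_s - warning_started_s) >= reaction_window_s


# Hypothetical timeline: warning at t = 10.0 s, checked again at t = 12.0 s.
should_brake = brake_intervention_needed(10.0, 12.0, operator_reacted=False)
```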

Assistance functions for greater productivity

Haptic collision warning is just one example of how modular assistance systems can contribute to enhancing safety in the working environment. While this type of information is still predominantly provided by the cameras and sensors mounted on the machine itself, in the future, data generated in the surrounding environment, for example by other vehicles, robots and drones, could be combined into an intuitive situational picture for the operator. The “Off Highway Twins 2” (OHT-2) project, which is based in Germany, takes up this approach. By fusing geodata from the cloud with sensor and telemetry data using modeling, sensor data fusion and AI, comprehensive and detailed models of infrastructure objects and their surroundings, known as off-highway twins, are to be derived in real time. For example, it would be possible to learn how much material an excavator has moved, which is detectable from the movement and drive data, and to note directly in the terrain model where the excavation has taken place.

“The conceivable scenarios and the added value of such information are key to increasing productivity for various areas of application in the agricultural and construction industries,” emphasizes Kaiser. All of this shows: “Autonomous technologies and assistance systems, such as those on display in Hanover, Germany, from November 9 to 15, score points through improved processes, more precise work and their contribution to enhanced safety. Work processes in the off-highway environment can be precisely planned and coordinated,” says the SYSTEMS & COMPONENTS Brand Manager.